Journey

A timeline outlining my academic and research trajectory, key milestones, and the progression of my research interests in robotics and embodied AI.

Academic Timeline

Key milestones in my academic and research journey

Research Assistant

Embodied AI Research

Having declined my guaranteed admission to a graduate program in Astronautics, I am currently working as a Research Assistant at the SJTU MINT Lab on Embodied Intelligence (VLA).

First Bold Attempt

Guangzhou Xiangrui Technology Innovation Co., Ltd.

Building on my hands-on experience with UAVs and robotics at university, I attempted to co-found a startup with classmates, although the venture ultimately did not succeed.

First Research Internship

Intelligent Robotics Research Center, NWPU

Special thanks to Prof. Ma for introducing me to robotics and 3D research and for his strong support at the beginning of my research career.

First National-Level Award

YOLO, STM32, Qt

Participated in a national-level disciplinary competition for the first time, placing 4th among 88 finalist teams in the national finals and receiving the National First Prize.

First Research Project

Aviation Science and Technology Innovation Base

After a half-year evaluation, I was selected to join NWPU AMT, where I worked with the team to bring our self-developed unmanned aerial vehicles into flight.

Research Evolution

How my research interests have evolved over time

Early Stage

Hardware & Control

Started with hardware and electronics fundamentals, studying control theory, robotics, and microcontroller systems to build a strong theoretical foundation.

Growth Phase

3DV & Robotics

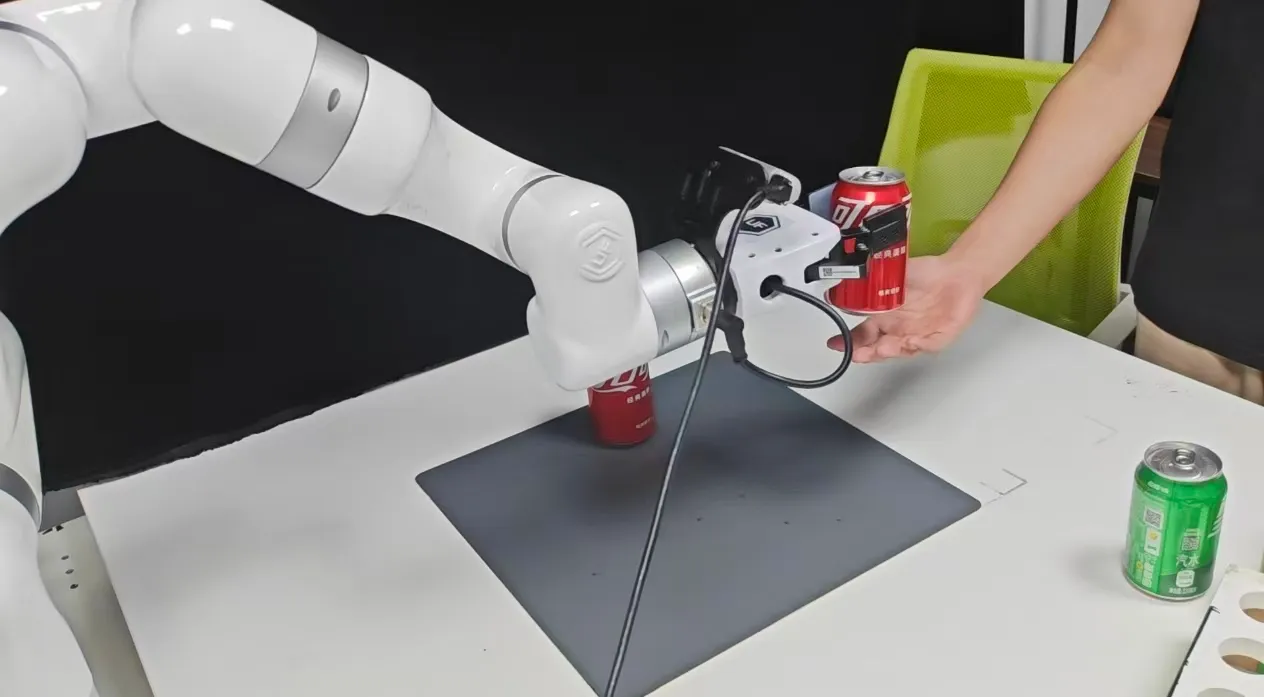

Transitioned to deep learning and computer vision, working on 6D pose estimation, digital twins, and real-world robot control.

Current Focus

Embodied AI

Now focused on Embodied AI, integrating 3D vision, control theory, and physical modalities to enable robotic systems to interact with the physical world more smoothly.

Reflections

Lessons learned and insights gained along the way

On the “V” Modality in VLA

"VLA inherits from VLM, whose vision capabilities are largely derived from understanding static images."

We could replace the image-based vision encoder with a video encoder, or strengthen the VLA's contextual understanding, to improve its visual capabilities.

On the “L” Modality in VLA

"The language modality is a minor component of the VLA training data and provides negligible conditioning for downstream tasks."

We could strengthen the “L” modality through pseudo-labeling, or rethink the roles of “V” and “L” in action control beyond the VLM framework.

On World-Model-Based Methods

"We can enhance robotic action capabilities through future prediction, although such predictions are costly and not always accurate."

A world model (WM) can be used solely as a reward model for RL post-training, or as a high-level model that provides low-frequency guidance. Occasional prediction errors are acceptable; the key is to efficiently extract useful supervisory signals from its outputs.

Interested in Collaboration?

I'm always open to discussing research ideas, potential collaborations, or just having a chat about AI and robotics.